This blog post contains a list of questions and answers to refresh your knowledge about Operation Systems.

What is an OS and why it is important?

An OS is a software program that bridges the hardware and the users. It runs on top of the hardware and manages how other software interact with the hardware. It controls memory, file systems, and processes. An OS can be thought of as a translator from users’ actions to machines’ language.

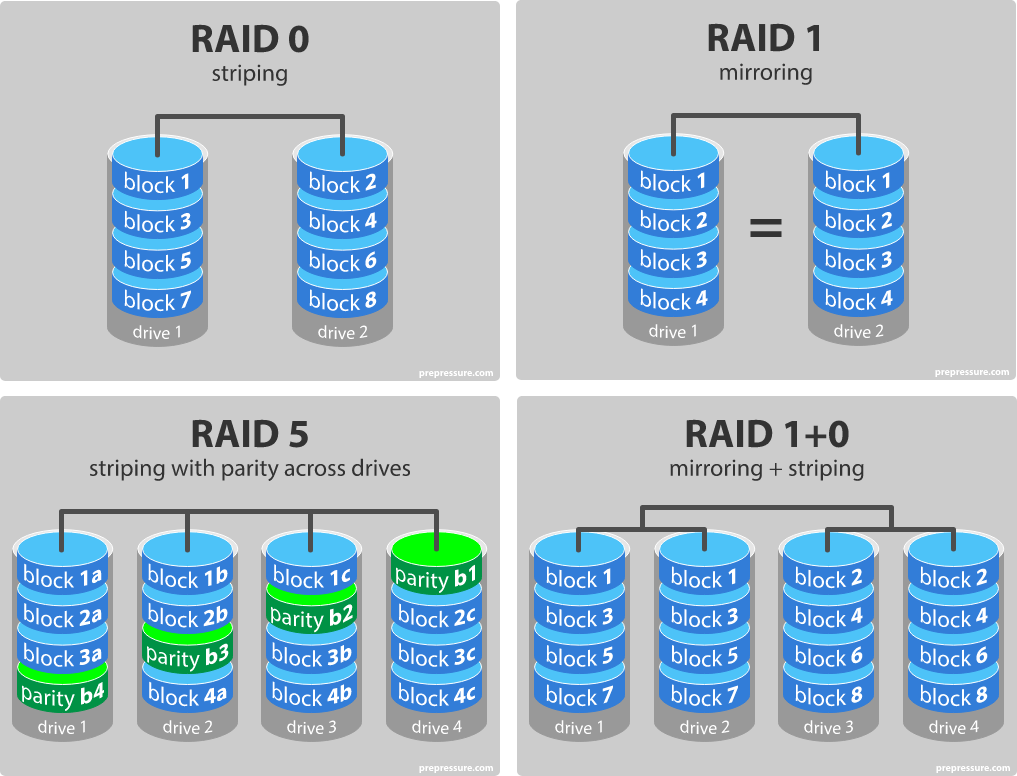

What is RAID? What are the different levels of RAID?

RAID (Redundant Arrays of Independent Disks) is a method to balance data protection and data redundancy. It reduces data loss with the cost of redundancy. RAID systems may store data in multiple disks in an efficient way so that if one or several disks are broken, the lost data can be reconstructed.

The most popular RAID levels are:

- RAID 0: stripping. There is no redundant, so also no fault-tolerance. However, as we strip the disks and store contiguous data in different disks, the I/O performance is superior to no-RAID.

- RAID 1: mirroring. Data is mirrored on all disks, i.e. every disk holds the same data.

- RAID 5: striping with parity. Every of the

ndisks is stripped intomblocks. Then for each row of n blocks, one of those n blocks is reserved as the parity block while the remainingn - 1blocks are used to store data. If one of the disks gets broken, data can still be recovered. - RAID 10: is basically RAID 1 + 0. We have at least 2 clusters of disks, every cluster holds the same data (i.e. mirroring). Inside each cluster, disks are stripped and contiguous data are stored in different disks (stripping).

What is a Pipe?

The pipe is a mechanism to send one-way messages from one process to another. The general idea is that the output of a process serves as the input for another process.

In the command line, a pipe can be created with the | character, e.g. cat text.txt | grep OS will find all occurrences of the word OS in file text.txt.

What is Race condition?

A race condition occurs in a multi-threading environment when one thread attempts to change the data when another thread is reading or changing that same data. That said, there is a race between multiple threads to read and change the common data. The resulting effect is then dependent on which thread is faster.

What is a semaphore? What is a mutex?

Both semaphores and mutexes are used to prevent race conditions.

There are 2 types of semaphores: counting and binary. A counting semaphore is an integer representing the number of available resources. A binary semaphore has only 2 states, 1 and 0, meaning if the data is available for use or not.

A mutex (Mutual Exclusion) is a lock that acts similarly to a binary semaphore. The only difference is that a lock can only be locked and unlocked by the same thread.

Mutexes are more common in general use cases.

What is a bootstrap program in OS?

When we power on the computer, the first program to run is the bootstrap program. It first performs some diagnostic tests and then initializes the OS.

What is demand paging?

Demand paging is a method to use memory more efficiently. That is, pages of data are only loaded to the memory on-demand, i.e. being lazy. This is as opposed to anticipatory paging which predicts what pages will be queried soon and pre-loads them into the memory.

The advantages of demand paging are:

- There is no need of an overhead to load everything into memory at the start.

- Small memory can be used effectively (more processes can run).

When do we need process synchronization?

We need process synchronization when 2 processes are interrelated in some ways. For example, if 2 processes access and modify the same data.

What is IPC? What are the different types of IPC?

IPC (InterProcess Communication) is a mechanism to allow processes to communicate with each other.

There are different approaches to IPC, most notably:

- File: Multiple processes can access and modify a file which is stored on disk.

- Pipe: one process can give its output to another process.

- Shared memory: multiple processes are allowed to access to the same shared memory region.

- Message queue: a data stream that multiple processes can read and write into. A message queue usually uses a socket connection (a socket is characterized by an IP address and a port number) to work across the network (using, for example, TCP or UDP protocol) so that even processes of different computers can communicate.

What is the main and secondary memory?

The main memory (i.e. RAM) stores the data that the CPUs require during execution. The secondary memory (e.g. disk, USB flash) is a warehouse that contains all data and programs we have.

The main memory is much faster. On the other hand, the secondary memory is bigger and non-volatile.

What are overlay and its advantages?

Overlay is a technique that allows running a program that is bigger than our RAM by only loading the parts of the program that the CPUs need. When the CPUs need some other parts of the program, some not-used-anymore parts are removed from RAM to load the on-demand parts.

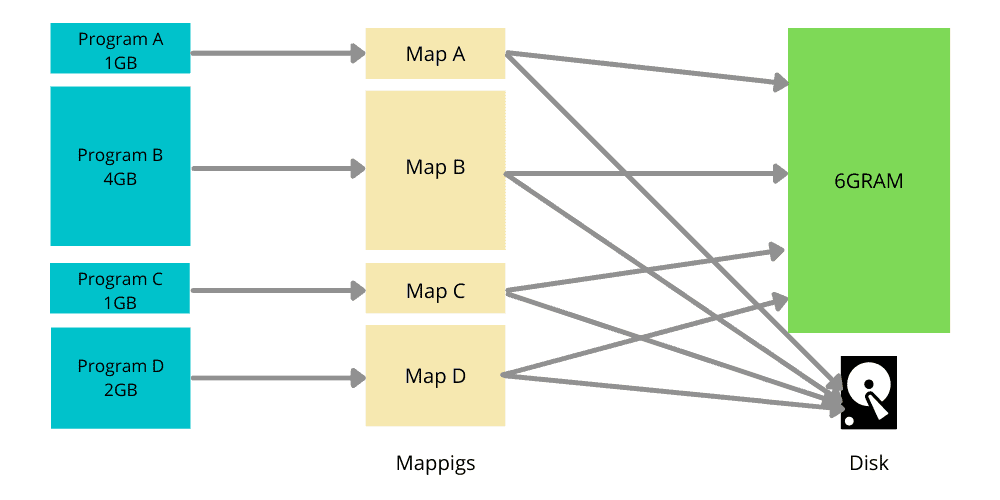

Compare Virtual memory and Swap space?

Virtual memory is a technique of the OS to make it look like we have much bigger RAM than we actually have. It does this by creating a virtual layer on top of RAM and disks. This virtual layer can then map from virtual addresses to physical addresses on either RAM (preferably) or disk (in case RAM is full).

Without Virtual memory, the programs work directly with RAM. With Virtual memory, the programs work with this virtual layer, which can extend the working space beyond RAM into disks.

The Swap space, located on disk, is a space such that when RAM is full, the computer can move some inactive data from RAM onto the swap space. This makes place to load new data onto RAM. We can think of the Swap space as a component of Virtual memory, the space in the disk that virtual memory maps to when the physical memory (RAM) is full.

When a process queries the data that is located in the Swap space, a Page fault is raised and this data needs to be loaded onto the RAM for the process to use.

What are page replacement algorithms and Belady’s anomaly?

Using virtual memory, data is divided into pages and similarly, RAM is divided into page frames (usually of the same size as a page) as placeholders to load pages on. When the RAM is full, some pages need to be removed (from RAM) to make room so that other useful data can be loaded (on RAM). To choose which data to be removed is the use of page replacement algorithms. Some of these algorithms are FIFO, LRU, LFU.

Usually, adding more RAM will result in fewer page faults. However, for some page replacement algorithms (e.g. FIFO), adding more RAM may even increase the number of page faults. This phenomenon is called the Belady’s anomaly.

LRU and LFU are stack-based algorithms. This type of algorithm never suffers from Belady’s anomaly because how they set the priority for a page does not depend on the number of page frames (on RAM). More details on geeksforgeeks.

Explain process and process table

A process is an instance of a running program. When we open an application (e.g. a command prompt, a text editor), a process is created.

The Process table is maintained by the OS to keep track of all running processes, their states and the resources they occupy. In the Process table, each process is stored as a Process control block.

A process can be in one of the following states: new, ready, running, waiting, terminated.

Compare threads and processes

| Thread | Process |

|---|---|

| A thread is a segment of a process. | A process is a whole program. |

| More light-weight. | More heavy-weight. |

| Threads share some memory. | Processes do not share memory. |

| Threads can NOT be distributed over multiple physical nodes. | Processes can be distributed over multiple physical nodes. |

| In some programming languages (e.g. Python), processes are needed to achieve real parallelism. |

Define and name some of the scheduling algorithms

A scheduling algorithm aims to maximize the efficiency of the CPUs when there are multiple processes. It assigns processes to CPU threads, may interrupt one process to run another process if needed.

Some of the most popular scheduling algorithms are:

- First come first serve.

- Shortest job first.

- Shortest remaining time first.

- Round robin.

Compare Paging and Segmentation

Both Paging and Segmentation are techniques to manage memory allocation. With paging, both the main and secondary memory are divided into partitions of fixed size (called pages or blocks). With Segmentation, the size of each segment is not fixed and is chosen by the user.

Explain thrashing

Thrashing is the problem when the computer spends a large amount of time to do swapping and paging rather than computing useful work. This happens if the RAM we have is less than what the active jobs demand.

A short-term solution for this is to kill some of the processes, while a long-term solution is to buy more RAM.

What is multiprogramming?

Multiprogramming is the widely used ability to let one processor handle multiple tasks at the same time. The objective of multiprogramming is to utilize the use of the CPU.

Time-sharing is a logical extension of multiprogramming by switching between jobs so frequently. That is, for example, the processor can execute task A, pause task A, execute task B, pause task B, then re-execute task A, and so on.

What is a zombie process?

A zombie process is a process that is completed or terminated but still exists because the cleaning is not done properly. Zombie processes do not consume resources and don’t do anything, they just exist.

What is an orphan process?

An orphan process is a process that is still running even though its parent process already terminated.

What does cascading termination mean?

Cascading termination means if the parent process is terminated, then its child processes are automatically terminated as well.

Explain process starvation and aging

Starvation is the scenario when a process never gets executed. This can happen, for example, if the scheduling algorithm is Shortest Remaining Time First, in which case a long process can be postponed forever.

Aging is a technique to address the starvation problem. It adds priority to processes that have been waiting for too long. With this, eventually, all processes have a chance to be executed.

Explain deadlocks

A deadlock occurs where multiple threads or processes are waiting for each other in circular to obtain the mutual-exclusive resources they need. Suppose we have 2 threads A and B, each of them needs 2 pieces of data X and Y to run. If currently, A holds X and B holds Y, both threads can not execute because they don’t have all the resources they need. This is a deadlock.

Note that deadlocks can only occur with the condition of No Preemption: the OS cannot force the process to release the resource it holds.

Thus, to resolve a deadlock, 3 common strategies are:

- Terminate one process at a time until deadlock is resolved.

- Take back the resource one process occupies until deadlock is resolved.

- Rollback a state in the past.

Explain Spooling in OS

SPOOL (Simultaneous Peripheral Operations OnLine) can be thought of as a buffering mechanism when the computer cannot complete one request before another one arrives. Take the printer as an example, the printing process is slow, so it is very likely that the printer receives multiple requests at once. These requests need to be buffered so that when the printer completes one request, it fetches another request from the buffer to continue.

What is Kernel in OS?

The kernel is at the core of the Operating system. OS is a combination of Kernel and Shell. While the OS acts as the interface between users and hardware, the kernel acts as the interface between software and hardware, the Shell acts as an interface between users and the Kernel.

Different Linux distros (e.g. Ubuntu, Mint, Fedora) use the same (Linux) kernel.

Explain Banker’s algorithm

The Banker’s algorithm is an algorithm for deadlock avoidance. It does so by simulating the allocation of resources. When a process requests a piece of resources, the OS simulates to see whether granting that data to that process will result in a safe state or not before actually grant.

A safe state is a state such that there exists at least one temporal order in which all processes can execute until completion without resulting in a deadlock.

Compare Logical Address and Physical Address

The physical address is the real address in the memory. The logical address (or Virtual address) is made up by the CPU in the execution time of each program and can be mapped to a physical address. The users only deal with the Logical address, leaving the mapping work to the OS.

The logical address is desirable to have the OS manage the memory securely so that no process can accidentally modify the memory region that belongs to another process.

Explain fragmentation and its types

Data is added to and removed from the memory, which can gradually decrease the efficiency of using the memory because of the fragments created.

There are 3 types of fragmentation:

- Internal fragmentation: This happens when the data size is not divisible by the page size. For example, in a system which uses page size of 4KB, a resource of size 21KB occupies 6 pages, resulting in 3KB of waste.

- External fragmentation: When data is added to and removed from the memory, it is likely that some small fragments of the memory are free but since they are very small, they cannot be used for anything, resulting in a waste.

- Data fragmentation: This usually happens when the memory already suffers from External fragmentation. In this case, a big data chunk needs to be subdivided into small parts to be distributed to non-contiguous page frames in the memory. Since the data is stored non-contiguously, accessing takes more time, i.e. resulting in a waste of processing time.

Usually, we endure the problem of fragmentation to gain speed and simplicity.

Explain Context Switching in OS

This refers to the act of switching processes in the CPU. The currently running process is stopped and saved (so that it can be resumed later), then another process is executed by the CPU.

What is a critical section?

In concurrent programming, when multiple tasks, threads, or processes access a shared resource, this resource is called a critical section. The critical section needs to be executed atomically to prevent unexpected and erroneous behaviors.

References:

- Operating system interview questions, interviewbit.

- Commonly Asked Operating Systems Interview Questions, geeksforgeeks.