Information Gain, Gain Ratio and Gini Index are the three fundamental criteria to measure the quality of a split in Decision Tree.

In this blog post, we attempt to clarify the above-mentioned terms, understand how they work and compose a guideline on when to use which.

In fact, these 3 are closely related to each other. Information Gain, which is also known as Mutual information, is devised from the transition of Entropy, which in turn comes from Information Theory. Gain Ratio is a complement of Information Gain, was born to deal with its predecessor’s major problem. Gini Index, on the other hand, was developed independently with its initial intention is to assess the income dispersion of the countries but then be adapted to work as a heuristic for splitting optimization.

| Test your knowledge |

| |

Before diving into details, it helps to elaborate on the definition of Entropy.

Information Entropy

Information Entropy, or just Entropy, is a measurement of the uncertainty in data. In the context of Classification Machine Learning, Entropy measures the diversification of the labels.

In another point of view, the Entropy measures how hard we guess the label of a randomly taken sample from the dataset. If most of the data have the same label, says, Positive, meaning the Entropy is low, thus we can bet the label of the random sample is Positive with confidence. On the flip side, If the Entropy is high, meaning the probabilities of the sample to fall into the 2 classes are comparable, making us hard to make a guess.

The formula of Entropy is given by:

![]()

where ![]() is the proportion of class i in the dataset.

is the proportion of class i in the dataset.

For example, a dataset with 30 Positive and 70 Negative samples has its Entropy:

![]()

A small issue with this formula is that ![]() is undefined. Thus, when all samples belong to the same class, we would have trouble computing the Entropy. For this case, we assume

is undefined. Thus, when all samples belong to the same class, we would have trouble computing the Entropy. For this case, we assume ![]() . This assumption makes sense since

. This assumption makes sense since ![]() . Also, if an event does not occur, it does not contribute to the disturbance of the data, hence does not affect Entropy.

. Also, if an event does not occur, it does not contribute to the disturbance of the data, hence does not affect Entropy.

On a side note, it is natural to wonder why the Entropy has this formula. Entropy is a measurement borrowed from Information Theory, or to be more specific, Data Compression. The Entropy of a dataset that contains words indicates the average number of bits needed to compress each word of the document. For example, suppose there is a document formed by 4 distinct words, with their proportion being (0.2, 0.1, 0.4, 0.3). The Entropy of this document is, as calculated by the above formula, ![]() 1.85. If the document’s length (the number of words) is n, says 30, then the approximate number of binary bits to encode is

1.85. If the document’s length (the number of words) is n, says 30, then the approximate number of binary bits to encode is ![]() . More on this can be found in Shannon’s source coding theory. As the number of bits to encode a word should not be larger than (approximately)

. More on this can be found in Shannon’s source coding theory. As the number of bits to encode a word should not be larger than (approximately) ![]() , the maximum bound of the Entropy is also

, the maximum bound of the Entropy is also ![]() .

.

Information Gain

We know what the Entropy is and how to compute it. Now it’s time to move on to the splitting criteria. The first one to be examined is Information Gain (IG).

The idea of IG is simple: the more the Entropy being reduced after splitting (that is, the more the dataset being clear after splitting, or says, the information gained by split), the more the Information Gain.

Let’s take an example.

Suppose we have a dataset of our last 100 days which records if we go outside to play or not. Positive (P) means we do go outside, while Negative (N) means we stay at home to study Data Mining.

The Entropy of our initial dataset is:

![]()

We want to make use of this dataset to build a Classification Tree that can predict if we will go out given predictor variables (e.g. the weather, is the day a weekday or weekend, the Air quality index).

To make a split with Information Gain, we need to measure how much information is gained if each of the predictor variables is used for splitting.

Firstly, let’s try using the Weather:

The Entropies of the resulting 2 sub-datasets are:

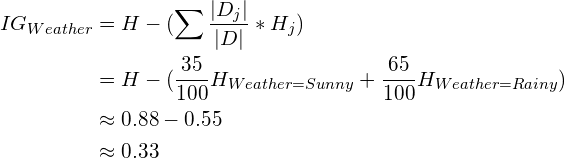

The Information Gain of a split equals the original Entropy minus the weighted sum of the sub-entropies, with the weights equal to the proportion of data samples being moved to the sub-datasets.

![]()

where:

is the original dataset.

is the original dataset. is the j-th sub-dataset after being split.

is the j-th sub-dataset after being split. and

and  are the numbers of samples belong to the original dataset and the sub-dataset, respectively.

are the numbers of samples belong to the original dataset and the sub-dataset, respectively. is the Entropy of the j-th sub-dataset.

is the Entropy of the j-th sub-dataset.

To illustrate, the Information Gain using Weather is:

All predictor variables are computed their splitting Information Gains similar to the process above, then the one with the highest value will be chosen to make the actual split.

…

Although being very useful, the Information Gain has an undesired characteristic, which is to favor the predictor variables with a large number of values. Those highly branching predictors are likely to split the data into subsets with low Entropy values. For example, in the extreme case, the ID code.

The disadvantages of these splits are:

To address this issue, an adjusted version of Information Gain was born, called Gain Ratio.

Gain Ratio

Gain Ratio attempts to lessen the bias of Information Gain on highly branched predictors by introducing a normalizing term called the Intrinsic Information.

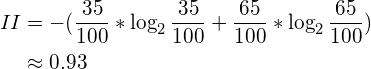

The Intrinsic Information (II) is defined as the entropy of sub-dataset proportions. In other words, it is how hard for us to guess in which branch a randomly selected sample is put into.

The formula of Intrinsic Information is:

![]()

In the above example of splitting using Weather, the Intrinsic Value is:

The Gain Ratio is:

![]()

Plug it to the above example:

For all the predictor variables, the one that gives the highest Gain Ratio is chosen for the split.

Gini Index

The last measurement is the Gini Index, which is derived separately from a different discipline. As we stated from the opening section of this post, the Gini Index (or Gini Coefficient) was first introduced to measure the wealth distribution of a nation’s residents.

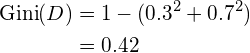

The Gini of a dataset is:

![]()

where ![]() is the proportion of a label.

is the proportion of a label.

The Gini of the above original dataset is:

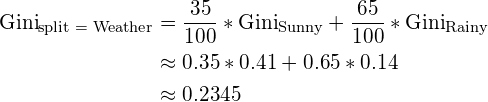

The Gini of a split is computed by:

![]()

where ![]() is the Gini of the j-th sub-dataset.

is the Gini of the j-th sub-dataset.

For the above example with Weather:

For all the predictors, the one that generates the lowest Gini split is chosen.

Comparision

In theory:

Below is a plot from ClementWalter on StackExchange comparing how Information Gain and Gini Index penalize according to proportions. The comparison is based on Binary Classification with values being normalized.

In practice, surprisingly, the performances of split measurements are quite similar, as Laura and Kilian pointed out in their paper, only 2% of the times that Information Gain and Gini Index disagree with each other, so it is really hard to say which one is better.

In terms of time efficiency, it is obvious from the formulas that Gini is faster than IG, which in turn is faster than Gain Ratio. This explains why the Gini Index is usually the default choice in many implementations of the Decision Tree.

| Test your understanding |

| |

References:

- Wikipedia’s page about Entropy: link

- Brillian’s page about Entropy: link

- Wikipedia’s page about Shannon source coding theorem: link

- Notre Dame University’s slides about Information Gain: link

- Wikipedia’s page about Gini coefficient: link

- Hong Kong University’s slide about Gain Ratio: link

- Theoretical comparison between the Gini Index and Information Gain criteria: link

- A question on StackExchange about Gini versus IG: link

Nice! Using this for a project. Thank you very much 🙂 I’ll make sure to cite you, Tung

Thanks, Ravi! I’m glad this helps.

Thank you. This is very much helpful to understand the basics.

It is very helpful. Ta!

nice to learn

thanks! greatly simplified things for me